Above are the first Google servers. The first iteration of Google's production servers was built with inexpensive hardware and was designed to be very fault-tolerant.

In 2013, Google published its "The Datacenter as a Computer" paper. http://www.morganclaypool.com/doi/pdf/10.2200/S00516ED2V01Y201306CAC024

A key part of this paper is its discussion of hardware failure.

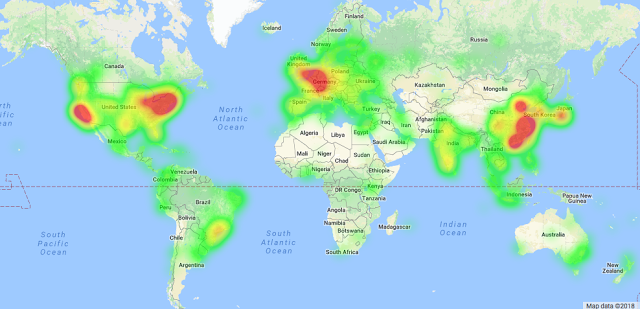

“1.6.6 HANDLING FAILURES

The sheer scale of WSCs requires that Internet services software tolerate relatively high component fault rates. Disk drives, for example, can exhibit annualized failure rates higher than 4% [123, 137]. Different deployments have reported between 1.2 and 16 average server-level restarts per year. With such high component failure rates, an application running across thousands of machines may need to react to failure conditions on an hourly basis. We expand on this topic further on Chapter 2, which describes the application domain, and Chapter 7, which deals with fault statistics.”
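To see why "hourly" is a fair description, here is a rough back-of-the-envelope sketch using the restart rates quoted above. The fleet size of 2,000 machines and the function name `restarts_per_hour` are assumptions for illustration, not figures from the paper, and the calculation treats restarts as spread evenly across the year.

```python
# Rough arithmetic: how often does a fleet see a server-level restart?
# Assumption: restarts are spread evenly over the year (real failures cluster).

HOURS_PER_YEAR = 24 * 365  # 8760


def restarts_per_hour(machines: int, restarts_per_server_year: float) -> float:
    """Expected fleet-wide restarts per hour for a given per-server yearly rate."""
    return machines * restarts_per_server_year / HOURS_PER_YEAR


if __name__ == "__main__":
    fleet_size = 2000  # hypothetical fleet size, not from the paper
    for yearly_rate in (1.2, 16):  # low and high ends quoted in the paper
        per_hour = restarts_per_hour(fleet_size, yearly_rate)
        print(f"{yearly_rate} restarts/server/year -> {per_hour:.2f} restarts/hour")
```

Under these assumptions the fleet sees somewhere between roughly 0.27 and 3.65 restarts per hour, so even at the low end an application has to cope with a failed server every few hours.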

Google has come a long way from using inexpensive hardware, but what has been carried forward is how to deal with failures.

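Some may think two nodes are enough for high availability, but when the nodes must agree by majority, a two-node system cannot survive the loss of either node. You need at least three nodes to tolerate one failure, and you really want five so the system can lose two, for example one down for maintenance and one failing unexpectedly.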
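The node counts above follow from simple quorum arithmetic. The sketch below assumes the system makes decisions by strict majority vote, as consensus protocols such as Paxos and Raft do. The post does not name a protocol, so treat that as an assumption, and the helper names `quorum_size` and `tolerated_failures` are made up for illustration.

```python
# Majority-quorum arithmetic: how many node failures can a cluster survive?
# Assumption: the cluster stays available only while a strict majority is up.


def quorum_size(nodes: int) -> int:
    """Smallest number of nodes that forms a strict majority."""
    return nodes // 2 + 1


def tolerated_failures(nodes: int) -> int:
    """Nodes that can fail while a majority is still reachable."""
    return nodes - quorum_size(nodes)


if __name__ == "__main__":
    for n in (2, 3, 4, 5):
        print(
            f"{n} nodes: quorum of {quorum_size(n)}, "
            f"tolerates {tolerated_failures(n)} failure(s)"
        )
```

Under this model two nodes tolerate no failures, three tolerate one, and five tolerate two. Four nodes tolerate only one, the same as three, which is why odd cluster sizes are the usual choice.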